Self-hosted Kubernetes homelab with K3s, Flux GitOps and ngrok

2023-07-27 | kubernetes, infrastructure, devops

Purpose #

Cloud infrastructure is nice and all, but sometimes you just want a simple Kubernetes cluster to play around with as cheaply as possible, taking advantage of some hardware you already have lying around.

This article describes a setup that:

- Costs nothing if you already have the hardware (except your existing internet and electricity bills)

- Should run on just about any machine with Ubuntu

- Gives you a real (k3s) Kubernetes environment that:
  - can expose services to the public internet
  - can be turned off easily without any hassle or losing data
  - can be turned on with services up within ~2 minutes

- Enables continuous deployments and GitOps with Flux

The instructions are relatively high level and may require special tuning for your setup. Further reading on some of the components is also encouraged. A sample repository is available here.

Key components and how they work #

Why Flux?

Flux is a GitOps toolkit for continuous deployments.

GitOps #

There are several advantages to GitOps, but in this scenario we benefit the most from automatic pull-based deployments (see below for more detail on that) and having a declarative approach to managing the contents of the cluster.

If you play around a lot with different applications that you install and remove from your cluster, it is easy to forget what’s actually there when you come back to it months later. When the contents of the cluster are declared in your Git repo, there is no confusion about the state of the cluster thanks to continuous reconciliation.

Flux gives us pull-based deployments (instead of push-based) #

Despite using ngrok, our cluster still has no public Kubernetes API endpoint. This means that a GitHub Actions workflow, for example, cannot run kubectl apply commands against our cluster.

This is great for security, and for this reason many tightly controlled environments use pull-based deployments anyway. Flux handles this for us nicely.

How does Flux work? #

There are a lot of components to Flux, but the easiest way to think about it for our scenario here is like this:

| Image controller | Source controller |
|---|---|
| A tiny app that: (1) continuously checks a chosen container registry for new image tags; (2) if a new tag is found, updates a Kubernetes manifest in a chosen GitHub repository with the new container image. | A tiny app that: (1) continuously pulls the latest manifests from a chosen repository; (2) “reconciles” the state by applying these manifests to the cluster. |

Thinking about this from a deployment perspective, the image controller will push commits like this to your repo that update a container tag:

- image: ghcr.io/antvirf/example-image:11
+ image: ghcr.io/antvirf/example-image:12

The next time the source controller pulls the repo, it sees a file has changed with this new container tag, and applies this to the cluster.
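To make the image-update flow more concrete, here is a minimal sketch of the Flux objects that drive it, written into the cluster folder of the repository. Everything beyond the image name is an assumption for illustration: the object name `example-image`, the file path, the intervals, the `main` branch, the commit author, and the choice of a numerical tag policy (which suits tags like 11 and 12). It also assumes the image is public; a private registry would additionally need a pull secret.

```shell
# Write the image automation manifests into the folder Flux reconciles.
mkdir -p clusters/homelab
cat > clusters/homelab/image-automation.yaml <<'EOF'
---
# Tells Flux which registry repository to scan for tags.
apiVersion: image.toolkit.fluxcd.io/v1beta2
kind: ImageRepository
metadata:
  name: example-image
  namespace: flux-system
spec:
  image: ghcr.io/antvirf/example-image
  interval: 5m
---
# Picks the "latest" tag: here, the highest number.
apiVersion: image.toolkit.fluxcd.io/v1beta2
kind: ImagePolicy
metadata:
  name: example-image
  namespace: flux-system
spec:
  imageRepositoryRef:
    name: example-image
  policy:
    numerical:
      order: asc
---
# Commits the selected tag back to the Git repository.
apiVersion: image.toolkit.fluxcd.io/v1beta1
kind: ImageUpdateAutomation
metadata:
  name: example-image
  namespace: flux-system
spec:
  interval: 5m
  sourceRef:
    kind: GitRepository
    name: flux-system
  git:
    checkout:
      ref:
        branch: main
    commit:
      author:
        name: fluxcdbot
        email: fluxcdbot@users.noreply.github.com
      messageTemplate: "Update image tags"
    push:
      branch: main
  update:
    path: ./clusters/homelab
    strategy: Setters
EOF

# In the Deployment manifest, mark the line Flux is allowed to rewrite:
#   image: ghcr.io/antvirf/example-image:11 # {"$imagepolicy": "flux-system:example-image"}
```

The marker comment is what connects a specific `image:` line to a policy; lines without a marker are left alone.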

Why ngrok?

One of the main challenges of a self-hosted homelab-style setup is exposing services - let’s say a website - to the public internet. Most internet service providers allocate regular users dynamic IPs that change from time to time, so pointing domain name records at your own IP is problematic. Beyond this, your home router needs to be adjusted to open or forward particular ports to reach your servers; this process tends to be manual and annoying, and can be problematic from a security perspective.

ngrok solves this problem nicely by creating a tunnel from your local machine to an edge network managed by ngrok. DNS and certificates are managed for you, and the connection lasts only as long as the tunnel is open - shutting down the service closes the tunnel. About a month before this article was written, ngrok announced its own Kubernetes ingress controller, which brings this functionality to Kubernetes. Services in the cluster can now be exposed cleanly via ngrok tunnels without having to figure out how to make load balancers work on your local machine.

Networking and access pattern with k3s and ngrok ingress controller #

Setup #

Step 0: Preparation #

- Hardware: The guide assumes you have a machine with a recent installation of Ubuntu.

- ngrok: You need to sign up for a free ngrok account and claim your free domain name.

Step 1: Install K3s #

K3s doesn’t need much configuration; we just pass the option to disable Traefik as we will be installing our own ngrok-based ingress controller instead.

curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="server --disable traefik" sh
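Once the installer finishes, it is worth confirming the node is up before moving on. The sketch below assumes the default K3s kubeconfig location (`/etc/rancher/k3s/k3s.yaml`) and copies it to `~/.kube/config` so that `kubectl` and the Flux CLI can reach the cluster without `sudo`. Skip the copy if you already keep another kubeconfig there.

```shell
# Default kubeconfig path written by the K3s installer (readable by root only).
K3S_KUBECONFIG=/etc/rancher/k3s/k3s.yaml

if [ -f "$K3S_KUBECONFIG" ]; then
  # The kubectl bundled with K3s works immediately; the node should report Ready.
  sudo k3s kubectl get nodes

  # Copy the kubeconfig for your own user.
  # Note: this overwrites any existing ~/.kube/config.
  mkdir -p "$HOME/.kube"
  sudo cp "$K3S_KUBECONFIG" "$HOME/.kube/config"
  sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
  chmod 600 "$HOME/.kube/config"
else
  echo "K3s kubeconfig not found at $K3S_KUBECONFIG - is K3s installed?"
fi
```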

Step 2: Set up Flux #

The Flux installation here is also very much standard, following the instructions provided by the project. Below is a quick summary, assuming you already have the Flux CLI installed. You also need a GitHub access token.

# Set up your GH token
export GITHUB_TOKEN=<your-token>

# Bootstrap Flux - customise the first 3 arguments to your setup.
# --components-extra adds the image controllers you want for automating deployments.
# --read-write-key is needed so Flux can update your repo.
# (Comments cannot follow a trailing backslash, so they are kept up here.)
flux bootstrap github \
  --owner=your-github-username \
  --repository=your-repo-name \
  --path=clusters/homelab \
  --private=true --personal=true \
  --components-extra=image-reflector-controller,image-automation-controller \
  --read-write-key
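After bootstrapping, a quick health check confirms that the controllers are running and that Flux is reconciling the repository. This is a minimal sketch that assumes the default `flux-system` namespace used by `flux bootstrap`.

```shell
# Namespace that `flux bootstrap` installs into by default.
FLUX_NAMESPACE=flux-system

if command -v flux >/dev/null 2>&1; then
  # Verify that the Flux components are installed and healthy.
  flux check

  # Show the Git source and the Kustomizations Flux is reconciling.
  flux get sources git --namespace "$FLUX_NAMESPACE"
  flux get kustomizations --namespace "$FLUX_NAMESPACE"
else
  echo "flux CLI not found on PATH"
fi
```

Each listed object should report `True` in its READY column once the first reconciliation has finished.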

Optional step: Install sealed-secrets #

In order to do things the “GitOps” way, your repository needs to be able to declaratively define the secrets your setup needs. The ngrok ingress controller will need your ngrok access token in order to communicate with their network, and likely you will also need to store credentials to a container registry such as GHCR.

A more ‘advanced’ setup might use something like external-secrets and store the actual values in e.g. AWS Secrets Manager, but for a simple setup sealed-secrets works nicely. It encrypts secrets with a public key so that only the private key held by your cluster can decrypt them, which means they can be safely committed to Git. The manifests further down deploy sealed-secrets with Flux.

Please follow the instructions provided by sealed-secrets, as the service consists of both a client-side and a cluster-side component. The manifests below install the cluster-side components for you with Flux. You can also refer to the example repository, which contains the application definition, the Flux deployment manifest, and a Makefile-based utility to help with creating sealed secrets conveniently.

Application definition manifests:

---
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  name: sealed-secrets
  namespace: flux-system
spec:
  interval: 10m0s
  url: https://bitnami-labs.github.io/sealed-secrets
---
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: sealed-secrets
  namespace: flux-system
spec:
  chart:
    spec:
      chart: sealed-secrets
      reconcileStrategy: ChartVersion
      sourceRef:
        kind: HelmRepository
        name: sealed-secrets
      version: 2.11.0
  interval: 10m0s

Step 3: Install ngrok ingress controller with Flux #

The ngrok ingress controller can also be installed via Helm, as shown below. Application definition manifests as well as Flux deployment manifests are available in the example repository.

Application definition manifests:

---
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  name: ngrok-ingress-controller
  namespace: flux-system
spec:
  interval: 10m0s
  url: https://ngrok.github.io/kubernetes-ingress-controller
---
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: ngrok-ingress-controller
  namespace: flux-system
spec:
  chart:
    spec:
      chart: kubernetes-ingress-controller
      reconcileStrategy: ChartVersion
      sourceRef:
        kind: HelmRepository
        name: ngrok-ingress-controller
      version: 0.10.0
  interval: 10m0s
  values:
    credentials:
      secret:
        name: ngrok-ingress-controller-credentials
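The release above expects a secret named `ngrok-ingress-controller-credentials` to exist. If you installed sealed-secrets in the optional step, one way to produce it is sketched below. Treat the details as assumptions to verify against the chart and kubeseal documentation: the secret keys `API_KEY` and `AUTHTOKEN`, the controller name `sealed-secrets` in the `flux-system` namespace (derived from the HelmRelease earlier), and the output path, which is hypothetical.

```shell
# Placeholders: replace with the values from your ngrok dashboard.
NGROK_API_KEY="<your-ngrok-api-key>"
NGROK_AUTHTOKEN="<your-ngrok-authtoken>"

# Hypothetical location inside the folder Flux reconciles.
OUT=clusters/homelab/ngrok-credentials-sealed.yaml

if command -v kubectl >/dev/null 2>&1 && command -v kubeseal >/dev/null 2>&1; then
  mkdir -p "$(dirname "$OUT")"
  # Render the Secret locally (--dry-run=client sends nothing to the cluster),
  # then encrypt it with the controller's public key.
  kubectl create secret generic ngrok-ingress-controller-credentials \
    --namespace flux-system \
    --from-literal=API_KEY="$NGROK_API_KEY" \
    --from-literal=AUTHTOKEN="$NGROK_AUTHTOKEN" \
    --dry-run=client -o yaml \
  | kubeseal \
      --controller-name sealed-secrets \
      --controller-namespace flux-system \
      --format yaml > "$OUT"
else
  echo "kubectl and kubeseal are both required"
fi
```

Only the sealed file is committed; the plain values never touch the repository.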

Step 4: Install your applications #

Depending on how you configured Flux, you will need to set up deployment manifests in the right folder - in the example case in ./clusters/homelab/.

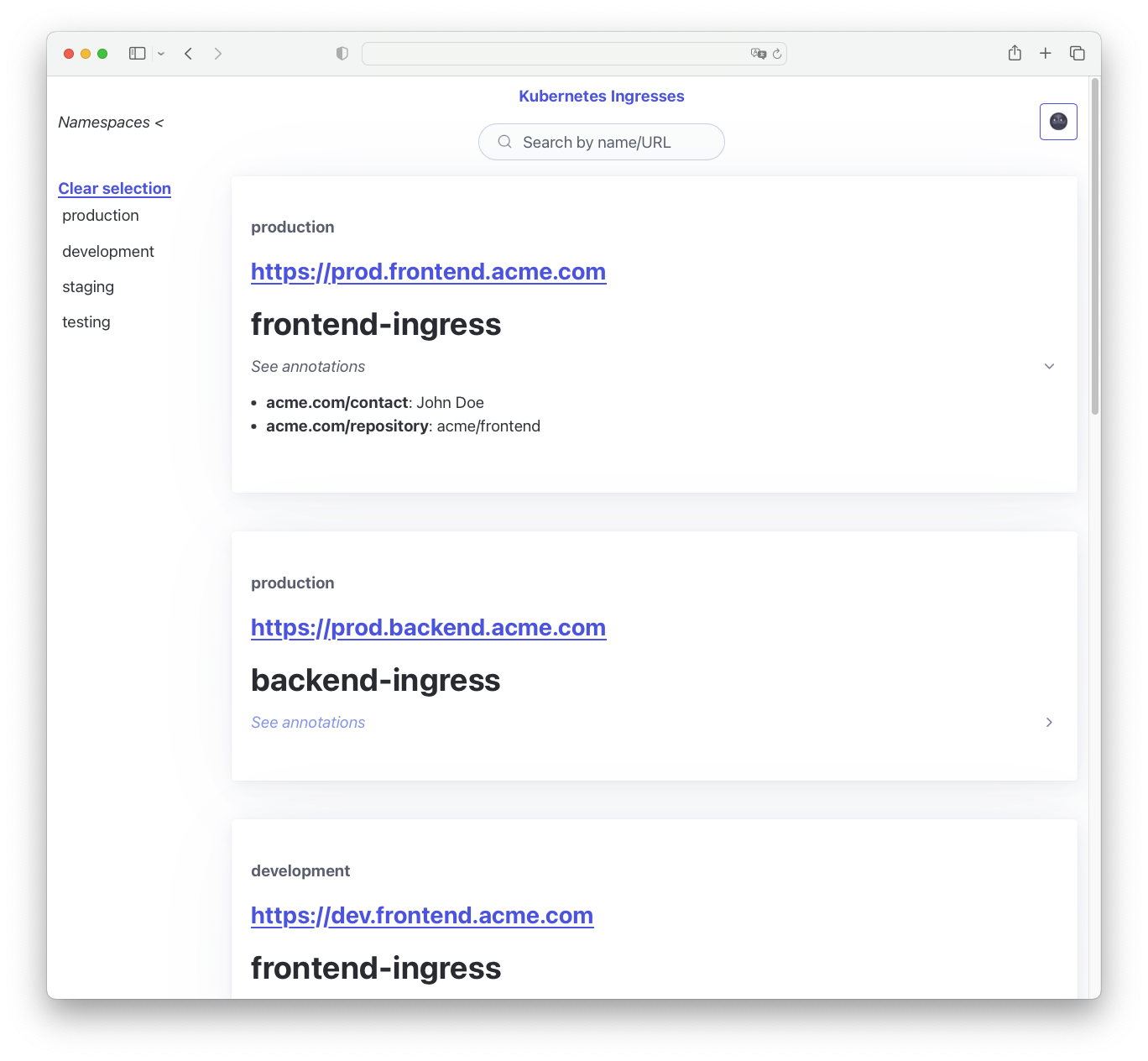

This step is entirely specific to what you want to install and how you wish to go about it. The example repository uses my kube-ingress-dashboard project.
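As a rough illustration of what such a deployment manifest can look like, the sketch below writes a Flux Kustomization into the cluster folder. The application name `my-app` and the `./apps/my-app` folder are hypothetical; the `flux-system` GitRepository is the one `flux bootstrap` creates by default. The `v1` API version assumes a Flux 2.0 or newer installation.

```shell
# Write a Flux Kustomization that points at a folder of application manifests.
mkdir -p clusters/homelab
cat > clusters/homelab/my-app.yaml <<'EOF'
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: my-app
  namespace: flux-system
spec:
  interval: 10m0s
  path: ./apps/my-app        # hypothetical folder holding the app's manifests
  prune: true                # delete cluster objects that are removed from Git
  sourceRef:
    kind: GitRepository
    name: flux-system        # the repository created by flux bootstrap
EOF
```

After this file is committed and pushed, Flux picks it up on its next pull and applies everything under the referenced path.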

Step 5: Expose the application with an ngrok ingress #

The Ingress objects expected by the ngrok ingress controller are relatively standard; the only things that need special attention are setting ingressClassName to ngrok and providing your free ngrok domain name as the host.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app
spec:
  ingressClassName: ngrok
  rules:
    - host: YOUR_URL # replace this value with your ngrok domain name
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app
                port:
                  number: 8000

After giving ngrok a second to synchronise, you should now be able to reach your application from your ngrok domain 😎
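For a quick check from the command line, a small helper like the one below prints the HTTP status code returned by your domain. The function name `check_app` is made up for this example.

```shell
# check_app DOMAIN: print the HTTP status code returned by https://DOMAIN/
check_app() {
  curl -sS -o /dev/null -w '%{http_code}\n' "https://$1/"
}

# Usage (replace with your own ngrok domain):
#   check_app YOUR_URL
```

A `200` means the tunnel, the ingress and the service are all wired up; if you get an error page instead, `kubectl describe ingress my-app` is a reasonable place to start looking.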